Searching 2

1. Brute-Force

Brute force algorithms are simple, exhaustive approaches to problem-solving. As the name suggests, these algorithms try all possible cases to find a solution. While inefficient in terms of time complexity—especially as problem size grows—brute force algorithms are valuable as a starting point for developing more sophisticated algorithms.

Characteristics:

- Easy to implement

- Explores all possible solutions

- Time complexity increases proportionally with problem size

- Serves as a baseline for algorithm optimization

2. Jump Point Search

Jump Point Search (JPS) can be described in terms of two simple pruning rules applied recursively during search: one rule is specific to straight steps, the other for diagonal steps. The key intuition in both cases is to prune the set of immediate neighbours around a node by trying to prove that an optimal path (symmetric or otherwise) exists from the parent of the current node to each neighbour and that path does not involve visiting the current node.

3. Linear Search

Linear search is the simplest search algorithm, comparing each element sequentially until the target is found.

Advantages:

- No need to sort data beforehand

- Simple to implement

Disadvantages:

- Time required increases linearly with data size

- Inefficient for large datasets

Example: To find a value in a dataset of 1 million elements, you may need up to 1 million comparisons in the worst case.

4. Power Set

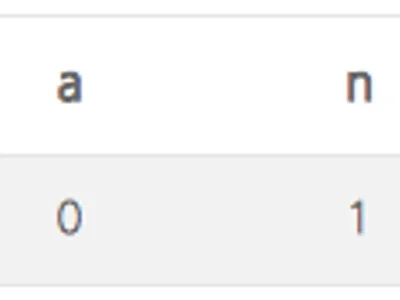

To list all subsets of {a, b, c, d, e, f}:

- List all subsets of {b, c, d, e, f} (excluding a)

- List sets that add {a} to all subsets of {b, c, d, e, f}

def power_set_1(set_):

def k_subsets(k, start_ind):

if k == 0:

return [[]]

else:

subsets = []

for ind in xrange(start_ind, len(set_) - k + 1):

for subset in k_subsets(k - 1, ind + 1):

subsets.append(subset + [set_[ind]])

return subsets

subsets = []

for k in xrange(len(set_) + 1):

for subset in k_subsets(k, 0):

subsets.append(subset)

return subsets5. Optimization Problem

An optimal algorithm is one where no other algorithm solving the same problem performs fewer major operations.

Steps to prove optimality:

-

Step 1: Devise a W(n) algorithm considered efficient for the given problem. Determine the workload needed in the worst case, where n represents the input data size.

-

Step 2: For function F, prove that any algorithm solving the problem requires a workload of at least F(n).

-

Step 3: If W(n) = F(n), then the algorithm is optimal for solving the problem.